OpenShift

We have to support multiple (but not many) OpenShift/OCP 4.x clusters, deployed in various places and in different ways depending on specific rules and constraints.

What these clusters have in common is that each needs an official subscription, managed centrally from https://console.redhat.com/openshift (see the inventory for credentials and/or internal emails).

AWS

deployment

To deploy in AWS, use the adhoc-deploy-ocp-aws playbook, but first read all the current documentation to understand what is needed. Before running the playbook, you need to:

- configure the Route53 public zone for the OCP sub-domain (with delegation working)

- obtain an AWS access key and secret for an IAM identity allowed to create VPCs, update Route53, deploy EC2 instances, etc. (see the documentation)

- get the official subscription/pull secret from https://console.redhat.com/openshift

- prepare your Ansible group with all the required variables
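Before running the playbook, it can help to sanity-check the pull secret downloaded from the console. The sketch below assumes the pull secret is the usual JSON document with a top-level `auths` object mapping registry hostnames to entries that each carry an `auth` token; the function name is hypothetical and the playbook does not necessarily perform this check itself.

```python
import json


def check_pull_secret(text: str) -> list[str]:
    """Validate a pull secret and return its registry hostnames, sorted.

    Assumes the layout {"auths": {"<registry>": {"auth": "<base64>", ...}}};
    raises ValueError if the document does not look like that.
    """
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"pull secret is not valid JSON: {exc}") from exc

    auths = data.get("auths") if isinstance(data, dict) else None
    if not isinstance(auths, dict) or not auths:
        raise ValueError("pull secret has no 'auths' entries")

    for registry, entry in auths.items():
        # Each registry entry is expected to carry a non-empty 'auth' token.
        if not isinstance(entry, dict) or not entry.get("auth"):
            raise ValueError(f"registry {registry!r} has no 'auth' token")
    return sorted(auths)
```

For example, `check_pull_secret(open("pull-secret.json").read())` (the file name is hypothetical) either lists the registries covered by the secret or raises with a hint about what is missing.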

Warning

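Be sure to review the VPC settings/subnets that the openshift-install binary will create when it runs, and ensure they do not overlap with your existing networks (for example, peered VPCs or on-premises ranges reached over VPN).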
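One way to check for overlap is to compare the CIDRs you plan to use (from install-config.yaml) with the ranges already in use elsewhere, such as other VPCs or on-premises networks. The sketch below relies only on Python's standard `ipaddress` module; the network names and CIDR values in it are placeholders, not our real ranges. As far as we know, openshift-install defaults to 10.0.0.0/16 for the machine network, 10.128.0.0/14 for the cluster network and 172.30.0.0/16 for the service network, but check the install-config.yaml you actually use.

```python
import ipaddress
from itertools import combinations


def find_overlaps(networks: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named CIDRs that overlap (order follows the dict)."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    overlaps = []
    for (name_a, net_a), (name_b, net_b) in combinations(parsed.items(), 2):
        # Only networks of the same IP version can overlap.
        if net_a.version == net_b.version and net_a.overlaps(net_b):
            overlaps.append((name_a, name_b))
    return overlaps


if __name__ == "__main__":
    # Placeholder values: replace them with the CIDRs from install-config.yaml
    # and the ranges already used by peered VPCs / on-premises networks.
    planned_and_existing = {
        "ocp-machine-network": "10.0.0.0/16",
        "ocp-cluster-network": "10.128.0.0/14",
        "ocp-service-network": "172.30.0.0/16",
        "peer-vpc": "10.0.0.0/20",
        "on-prem-dc": "192.168.0.0/16",
    }
    for a, b in find_overlaps(planned_and_existing):
        print(f"OVERLAP: {a} and {b}")
```

With these placeholder values, the only pair reported is the machine network and the peered VPC, which is exactly the kind of clash that would later prevent VPC peering.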

management

Depending on the environment, the ocp-admin-node can be used to:

- deploy/replace TLS certificates

- back up etcd node data on a daily basis

- configure some OpenShift settings (see the role)

- add/remove projects/groups/namespaces

authentication

We don't want to use the internal authentication but instead rely on FAS/ACO, so the first thing to do is to tie OpenShift to Ipsilon (id.centos.org, or id.stg.centos.org for staging).

This is configured by the ocp-admin-role, but it needs some variables first: create the client ID for the OCP cluster on the Ipsilon side (an admin operation), then update the Ansible inventory with all the variables prefixed ocp_idp_.

Once the role has been applied, OpenShift allows users to log in through FAS/ACO.
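For reference, an OpenID Connect identity provider in OpenShift is declared in the cluster-wide `OAuth` resource (named `cluster`). The sketch below builds such a resource as a Python dict and prints it as JSON. It is not the role's actual template: the provider name, client ID, secret name, issuer URL and claim mappings are all hypothetical and would have to match what was registered on the Ipsilon side and the ocp_idp_ variables in the inventory.

```python
import json


def build_oauth_config(idp_name: str, client_id: str, secret_name: str, issuer: str) -> dict:
    """Build an OAuth 'cluster' resource with a single OpenID Connect provider.

    The client secret is referenced by name: it is expected to be a Secret in
    the openshift-config namespace holding the value under the key 'clientSecret'.
    """
    return {
        "apiVersion": "config.openshift.io/v1",
        "kind": "OAuth",
        "metadata": {"name": "cluster"},
        "spec": {
            "identityProviders": [
                {
                    "name": idp_name,
                    "mappingMethod": "claim",
                    "type": "OpenID",
                    "openID": {
                        "clientID": client_id,
                        "clientSecret": {"name": secret_name},
                        "issuer": issuer,
                        # Assumed claim names: adjust to what Ipsilon actually returns.
                        "claims": {
                            "preferredUsername": ["preferred_username"],
                            "name": ["name"],
                            "email": ["email"],
                        },
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical values, including the issuer URL: the real ones come from
    # the ocp_idp_ variables and the Ipsilon client registration.
    config = build_oauth_config(
        idp_name="fas",
        client_id="ocp-example-cluster",
        secret_name="fas-idp-client-secret",
        issuer="https://id.stg.centos.org/idp/openidc",
    )
    print(json.dumps(config, indent=2))
```

The printed JSON can be compared with the output of `oc get oauth cluster -o json` to check what the role actually deployed.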

It is also worth knowing that the ocp_fas_sync boolean lets you automatically create projects/groups/namespaces/RBACs for the groups whose names start with the value of ocp_fas_group_prefix (for example, on the OCP CI cluster the IPA/FAS groups are all prefixed ocp-cico-).
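The group selection boils down to a prefix match on the group name. The sketch below only illustrates which groups would be picked up; the function name and sample groups are hypothetical, and how each selected group is turned into project, namespace and RBAC names is defined by the role, not here.

```python
def groups_to_sync(fas_groups: list[str], prefix: str) -> list[str]:
    """Return the FAS/IPA groups whose names start with `prefix`, sorted."""
    if not prefix:
        # An empty prefix would select every group, which is unlikely to be intended.
        raise ValueError("prefix must not be empty")
    return sorted(group for group in fas_groups if group.startswith(prefix))


if __name__ == "__main__":
    # Hypothetical group names; on the OCP CI cluster the prefix is ocp-cico-.
    groups = ["ocp-cico-foo", "other-group", "ocp-cico-bar"]
    print(groups_to_sync(groups, "ocp-cico-"))
```

Note that the match is case-sensitive and literal, so the prefix set in ocp_fas_group_prefix has to match the group names exactly.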

Storage for PersistentVolumes

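By default, OCP deployed on AWS can use EBS volumes, but these come with limitations (for example, an EBS volume is tied to a single availability zone and is normally attached to one node at a time). One can instead use EFS as a centralized storage solution for PersistentVolumes, and our playbook supports this.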

Warning

If you want to ensure that PVCs use the PVs created on top of EFS instead of EBS, you should make sure gp2 is no longer the default StorageClass (under Storage/StorageClasses, edit gp2 and set the annotation storageclass.kubernetes.io/is-default-class: 'false').
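To double-check the result, one can list the StorageClasses and look at the storageclass.kubernetes.io/is-default-class annotation. The sketch below takes the parsed JSON output of `oc get storageclass -o json` and reports which classes are still marked as default, so you can confirm gp2 is no longer among them; the function name and the sample class names in the usage note are hypothetical. The same annotation can also be changed from the CLI with `oc patch storageclass gp2 -p '{"metadata": {"annotations": {"storageclass.kubernetes.io/is-default-class": "false"}}}'`.

```python
DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_storage_classes(storageclass_list: dict) -> list[str]:
    """Return the names of StorageClasses annotated as the cluster default.

    `storageclass_list` is the parsed output of `oc get storageclass -o json`,
    i.e. a dict with an "items" list of StorageClass objects.
    """
    defaults = []
    for item in storageclass_list.get("items", []):
        metadata = item.get("metadata", {})
        annotations = metadata.get("annotations") or {}
        # Annotation values are strings, so compare against "true".
        if annotations.get(DEFAULT_ANNOTATION, "").lower() == "true":
            defaults.append(metadata.get("name", "<unnamed>"))
    return defaults
```

For example, feeding it `json.loads(output)` where `output` is the text printed by `oc get storageclass -o json` should no longer return `"gp2"` once the annotation has been changed.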

Specific OCP CI cluster notes

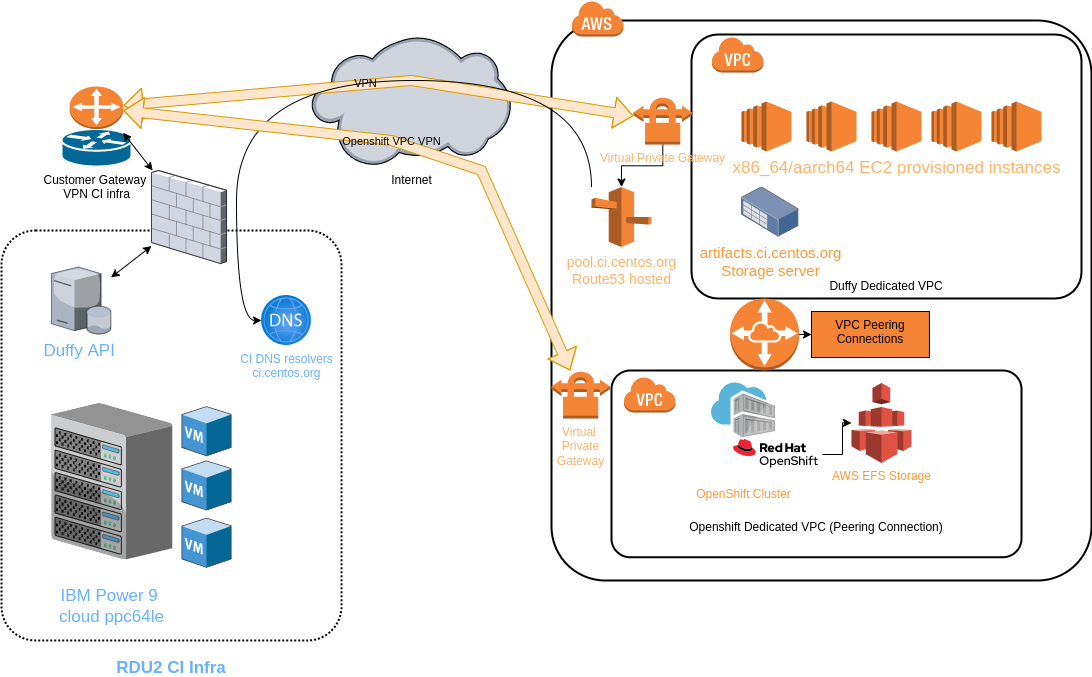

Here is an an overview of the deployed ocp ci cluster :

Apart from the FAS authentication and the EFS volumes, it's worth knowing that:

- we use VPC peering between the Duffy and OCP VPCs (see the Ansible inventory for details)

- we use internal Route53 resolvers to forward queries to specific name servers

- we use Site-to-Site VPN connections between the VPCs and the on-premises DC (see the Ansible inventory for the ec2gw nodes)

- a security group is attached to EFS to allow NFS traffic from the OCP nodes (so that worker nodes can mount NFS PVs)
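When several Route53 Resolver forwarding rules could apply to a query, Resolver uses the rule with the most specific domain name. The sketch below mimics that selection to help reason about where a given name will be forwarded. The domains and target IPs are hypothetical (see the Ansible inventory for the real rules), and it ignores details such as system rules or rules shared across accounts.

```python
from typing import Optional


def _labels(name: str) -> list[str]:
    """Split a DNS name into lowercase labels, ignoring a trailing dot."""
    return [label for label in name.lower().rstrip(".").split(".") if label]


def pick_forwarding_rule(qname: str, rules: dict[str, list[str]]) -> Optional[list[str]]:
    """Return the target IPs of the most specific rule matching `qname`.

    A rule for "example.org" matches "example.org" and any subdomain of it;
    when several rules match, the one with the most labels wins. Returns
    None when no rule matches (the query is then not forwarded by a rule).
    """
    query = _labels(qname)
    best_domain, best_len = None, -1
    for domain in rules:
        labels = _labels(domain)
        # Compare whole labels so "example.org" does not match "barexample.org".
        if len(labels) <= len(query) and query[len(query) - len(labels):] == labels:
            if len(labels) > best_len:
                best_domain, best_len = domain, len(labels)
    return rules[best_domain] if best_domain is not None else None


if __name__ == "__main__":
    # Hypothetical rules: domain -> on-premises name server IPs.
    rules = {
        "example.org": ["192.0.2.10"],
        "dc.example.org": ["192.0.2.53", "192.0.2.54"],
    }
    print(pick_forwarding_rule("host1.dc.example.org", rules))
    print(pick_forwarding_rule("www.example.org", rules))
    print(pick_forwarding_rule("www.example.net", rules))
```

With these hypothetical rules, `host1.dc.example.org` is sent to the `dc.example.org` name servers, `www.example.org` to the broader `example.org` rule, and `www.example.net` matches no rule at all.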